Databricks is an Enterprise Software company founded by Apache Spark's creators. It is known for combining the best of Data Lakes and Data Warehouses in a Lakehouse Architecture. In addition, it provides its users with a comprehensive suite of High-Level APIs focused on Application Development.

This blog talks in detail about leveraging Databricks Spark for your business use case and improving your workflow efficiency.

Databricks is a cloud-based Data platform fueled by Apache Spark. Databricks is created to make Big Data and AI simple for organizations. Databricks offers a Unified Data Analytics Platform, which is an online environment where data practitioners can collaborate on data science projects and workflows.

Spark was released by Apache Spark Corporation to improve the speed of the Hadoop computational computing software process. Spark is used for big data processing with built-in modules. It's the de-facto standard unified analytics engine and the largest open-source project in the data processing.

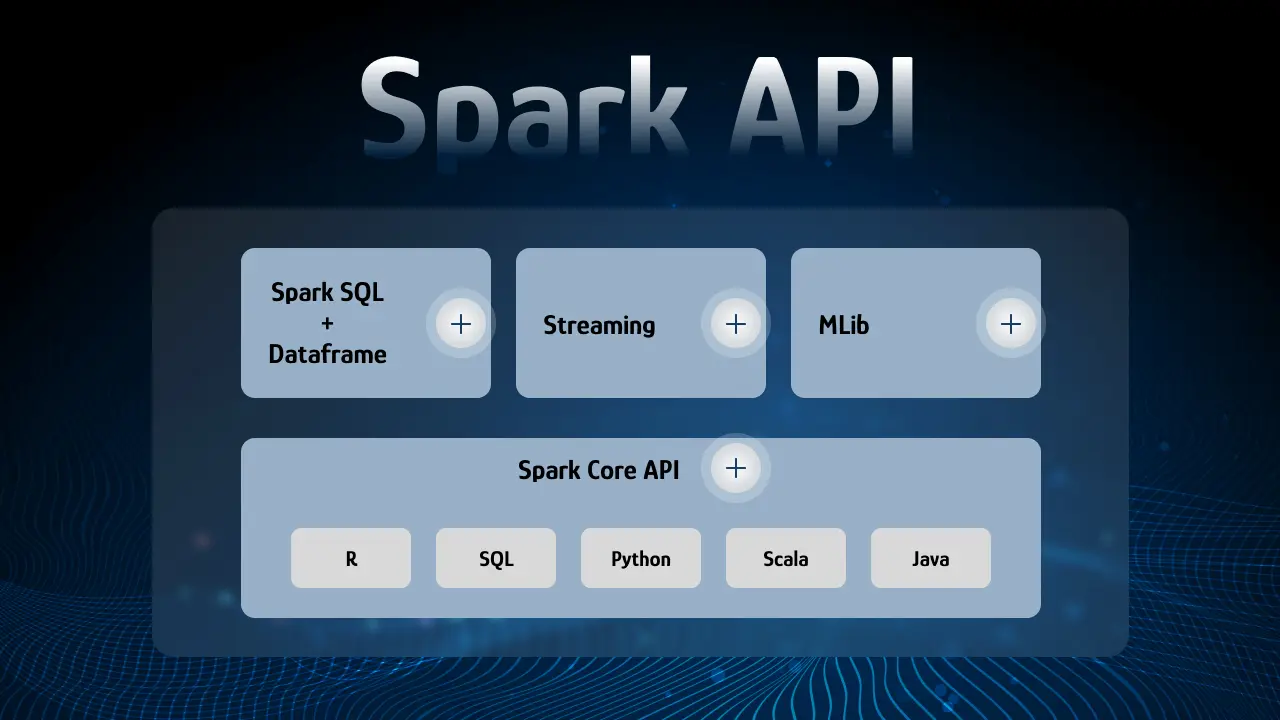

This technology was created by the founders of Databricks. It is a unified API and engine for SQL queries, streaming data, and machine learning. Spark can be seamlessly combined with other tools to create complex workflows.

Spark uses clusters of machines to process big data by splitting large tasks into smaller ones and distributing the work among several machines. Let's look at how spark executes a spark application. The secret to spark's performances is parallelism. This parallelized action is referred to as a job. Each job is broken down into stages.

Each job is broken down into several stages, a set of ordered steps that accomplish a job together. Tasks are created by the driver and assigned a section of data to process. These are the smallest unit of work.

The three key apache spark interfaces are Resilient Distributed Dataset, Data Frame, and Dataset.

RDD - RDD is an interface to a sequence of data objects consisting of one or more types located across a collection of machines. The RDD API is available in Java, Python, R, and Scala language.

Dataframe - This is a similar concept to data frames in pandas python. The Data Frame API is available in Java, Python, R, and Scala language.

Dataset - A combination of DataFrame and RDD. It provides the typed interface that is available in RDDs while providing the convenience of the DataFrame.

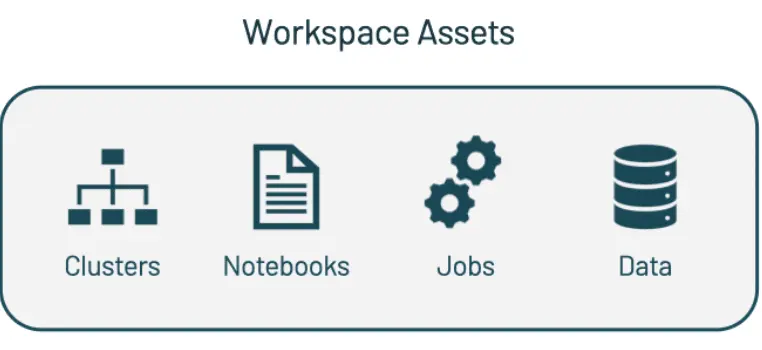

In the Databricks workspace, we can access all of our Databricks assets. The Databricks workspace organizes objects like notebooks, libraries, and experiments into folders providing access to data and provides access to computational resources, such as clusters and jobs.

Also, for the big data pipeline, the raw or processed data is ingested into Azure via Azure Data Factory in batches. The data also gets streamed near real-time using Apache Kafka, Event Hub, or IoT Hub, and the data lands in a data lake for long-term storage, in Azure Blob Storage or Azure Data Lake Storage. Moreover, As part of your analytics workflow, users can also use Azure Databricks to read data from multiple data sources.

Clusters are sets of computational resources that are configured in different ways to run data engineering, data science, and data analytics workloads. We run these workloads as a set of commands in a notebook or as an automated job. You can set different configurations for each cluster, including the version of Databricks Runtime.

Most of the work done in the Workspace is done via Databricks notebooks. Each notebook is a web-based interface composed of a group of cells that allow you to execute coding commands. Databricks notebooks are unique in that they can run code in a variety of programming languages, including Scala, Python, R, and SQL, and can also contain markdown.

Once you add your code to a notebook, a job allows you to run your notebook either immediately or on a scheduled basis. The ability to schedule notebooks to run daily or weekly allows data analysts to generate reports and BI dashboards without manual involvement programmatically.

The data you see in your Databricks Workspace is stored in external sources. Through the Databricks File System (DBFS), Databricks uses optimized access patterns and security permissions to access and load the data you need. Because of this, no data migration or duplication is needed to run analytics from Databricks.

This blog talks about the different aspects of leveraging Apache Spark with Databricks Datasets and DataFrames. It also briefly introduces the features of Apache Spark and Databricks. Extracting complex data from a diverse set of data sources can be tough, and this is where Kockpit Analytics comes into the picture! Kockpit offers a faster way to move data from several Data Sources or SaaS applications into your Data Warehouses like Databricks to be visualized in a BI tool of your choice.

Discover the most interesting topic