
Kockpit’s pre-configured images make workspace configuration quick and hassle-free. The Airflow image is a virtual machine image (VMI) that lets you set up your machines within minutes.

In this Azure Marketplace image, we have packaged an Airflow container in a virtual machine. The Airflow DAGs are stored under /usr/local, in the dags directory, where you can create and modify your Python DAGs.

Airflow’s rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed. It connects with multiple data sources and can send an alert via email or Slack when a task completes or fails. Airflow is distributed, scalable, and flexible, making it well-suited to handle the orchestration of complex business logic.

This distribution of Airflow is based on Linux and is provided by Kockpit Analytics Pvt. Ltd. The Airflow container is designed for production environments on Azure.

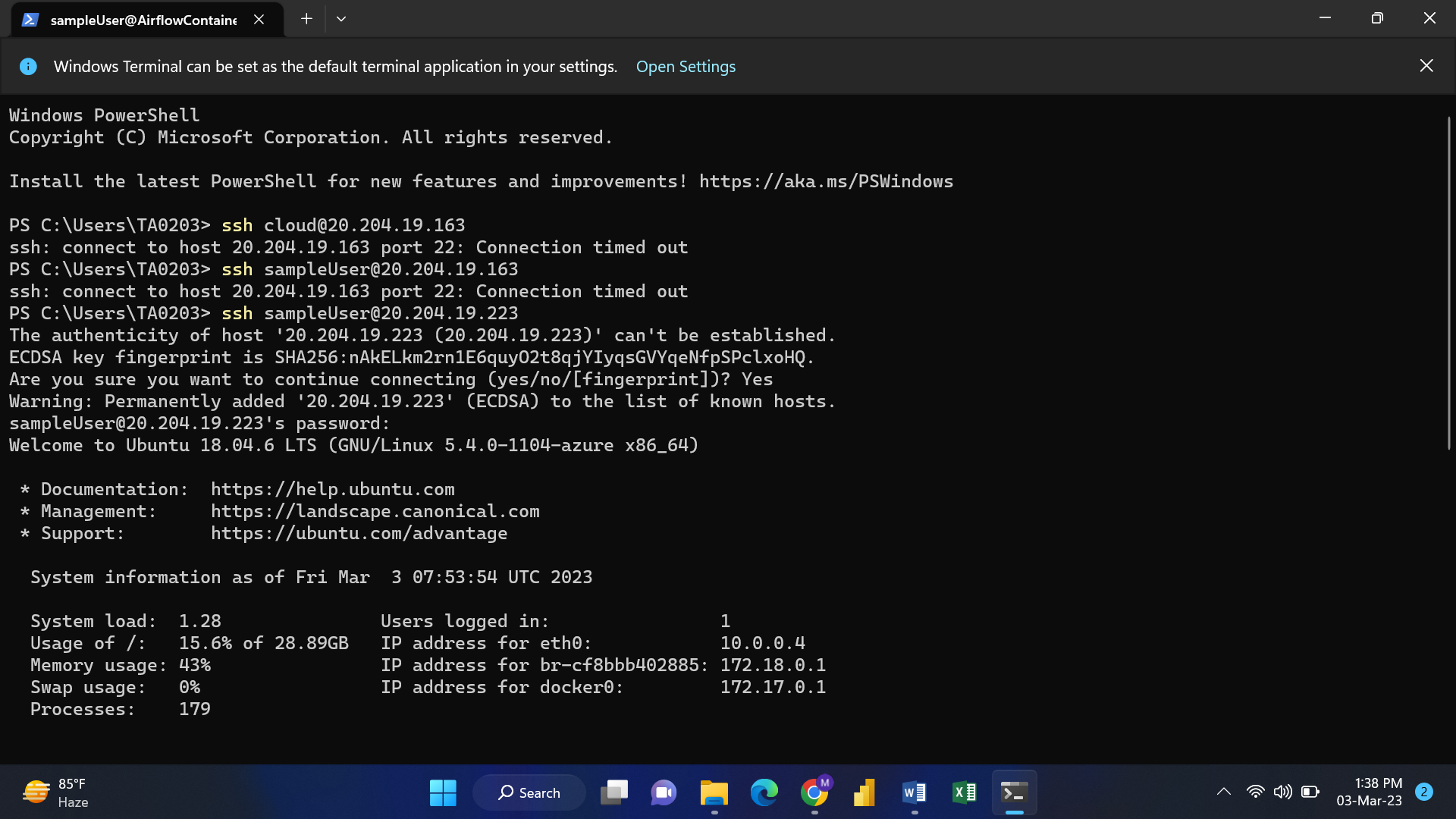

Log in to your Azure VM using SSH. Type ssh followed by your username, an @ sign, and the VM’s IP address, then enter the password when prompted.

Example: ssh sampleUser@20.204.19.223

Once the password is accepted, you are logged in to the VM.

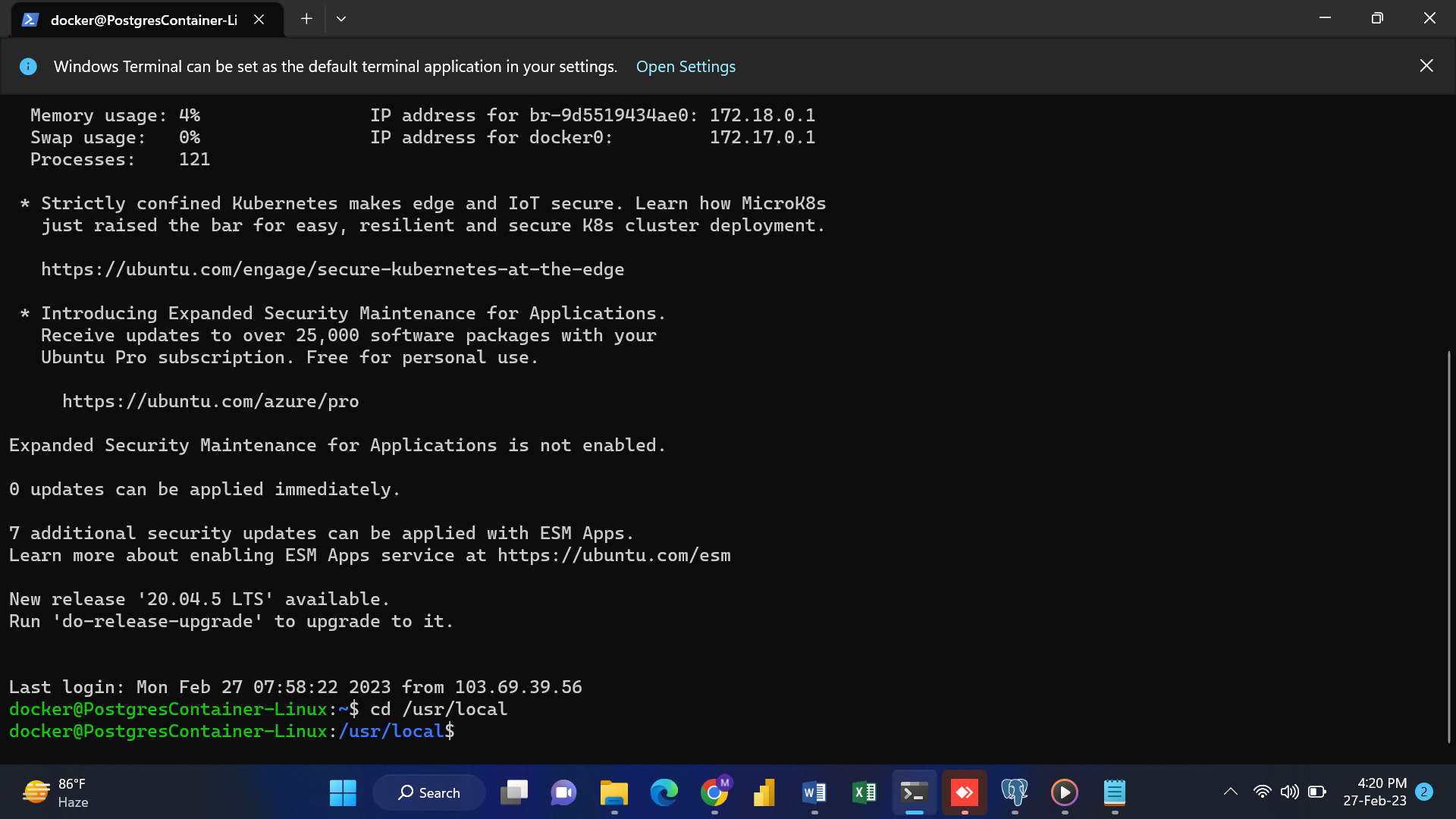

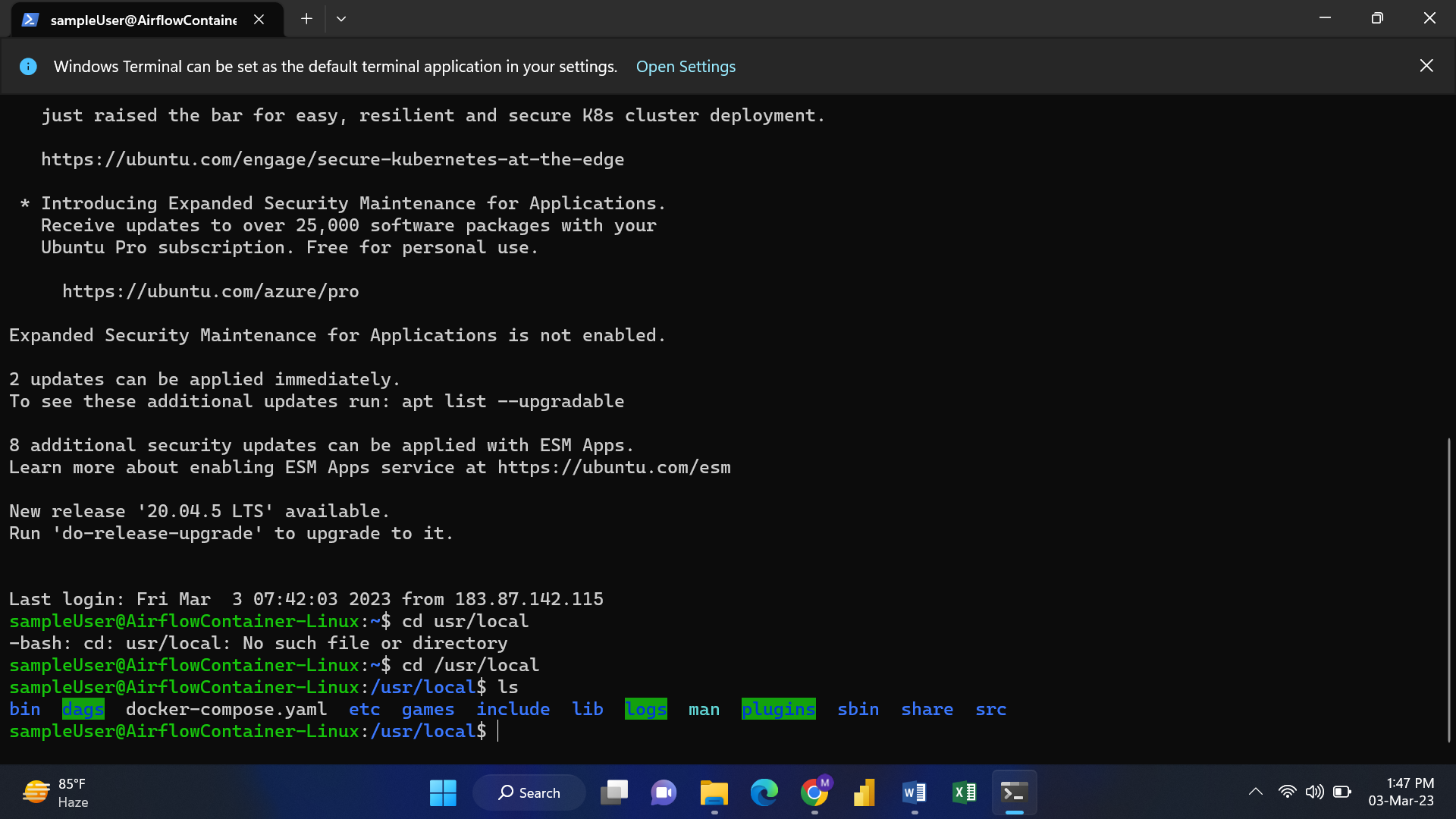

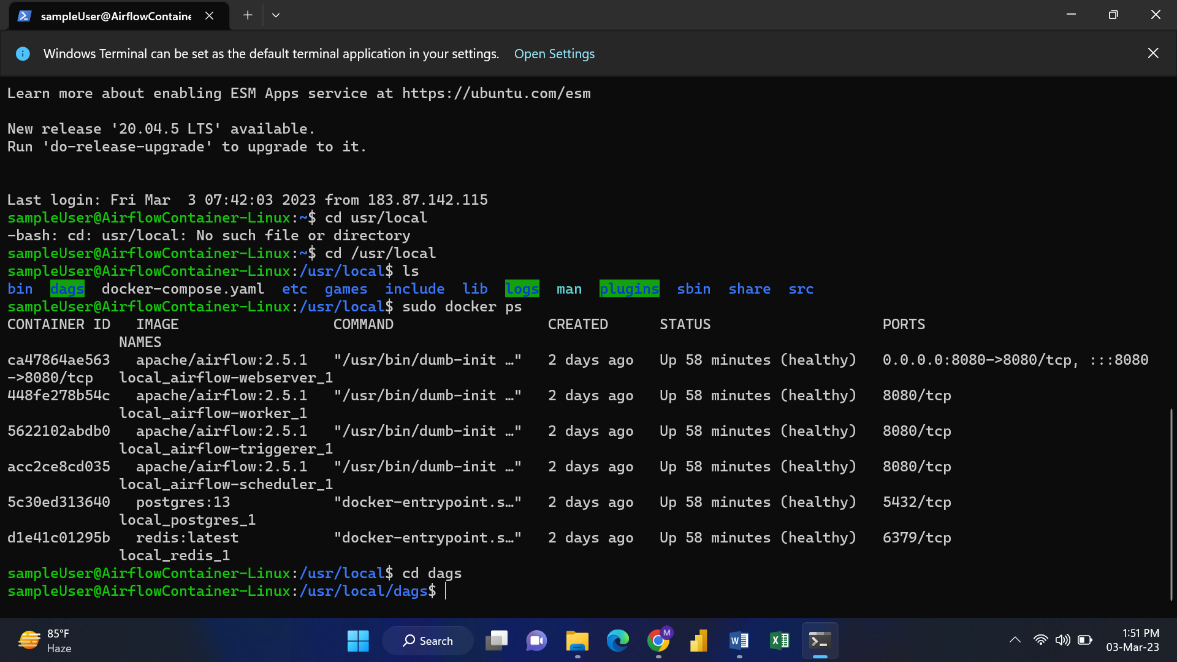

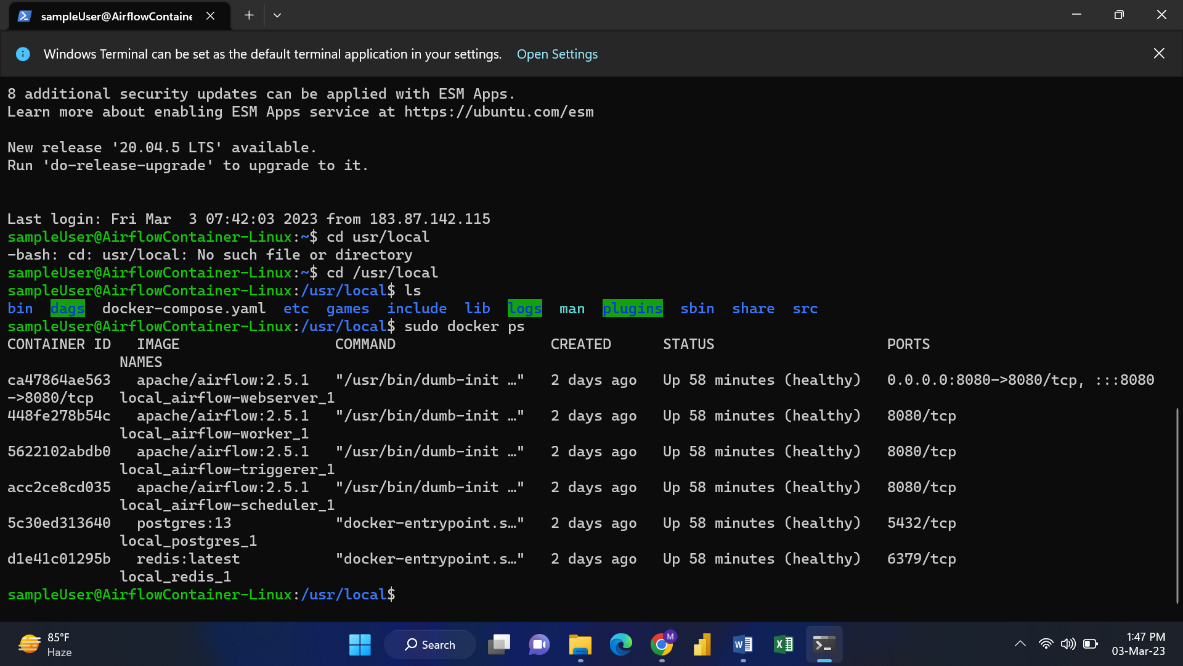

After logging in to your VM, change to the /usr/local directory.

Command: cd /usr/local

Now, type the “ls” command to get a list of files.

To check your active containers, type “sudo docker ps”.

Type “cd /usr/local/dags” to move into the dags directory, which sits inside /usr/local.

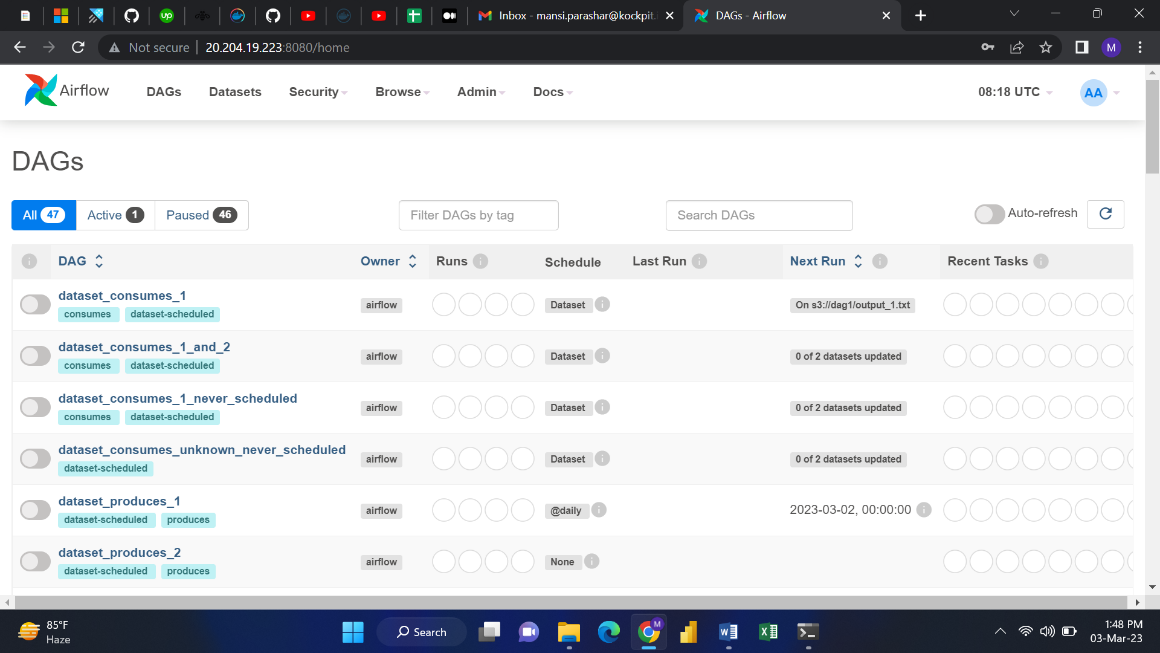

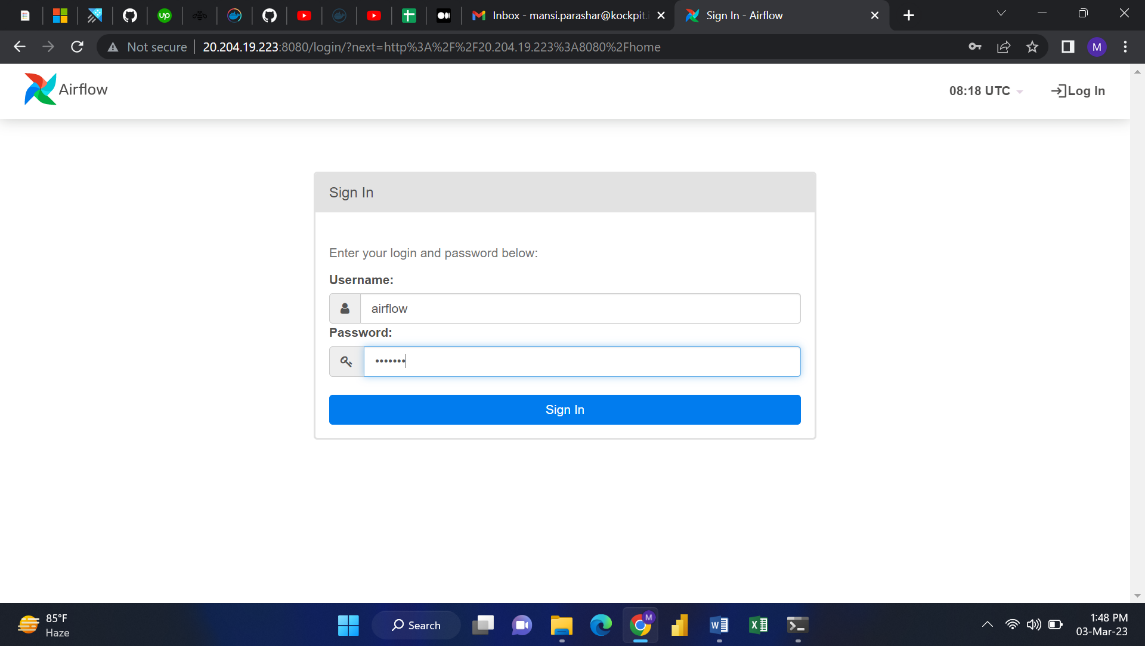
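Before Airflow picks up a new or edited DAG, it can help to confirm that the file at least parses. The following is a minimal sketch, not part of the Kockpit image: it assumes Python 3 is available where you run it and that your DAG files sit directly in /usr/local/dags. The function name `check_dag_syntax` is hypothetical.

```python
from pathlib import Path


def check_dag_syntax(dags_dir="/usr/local/dags"):
    """Compile every .py file in dags_dir and return {filename: error message}.

    Only syntax is checked; the files are not executed, so missing imports
    or invalid DAG definitions are not detected here.
    """
    errors = {}
    for path in sorted(Path(dags_dir).glob("*.py")):
        source = path.read_text(encoding="utf-8")
        try:
            compile(source, str(path), "exec")
        except SyntaxError as exc:
            errors[path.name] = f"line {exc.lineno}: {exc.msg}"
    return errors


if __name__ == "__main__":
    problems = check_dag_syntax()
    for name, message in problems.items():
        print(f"{name}: {message}")
    if not problems:
        print("No syntax errors found.")
```

An empty result only means the files are valid Python. Whether a DAG loads correctly still has to be confirmed in the Airflow UI.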

Open a browser and enter the IP address of the VM followed by the port number, which is 8080.

Example: 20.204.19.223:8080

Enter the username and password on the login page.

User Name: airflow

Password: airflow

After logging in, you will be directed to the Airflow home page.
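If the page does not load, a quick script can tell you whether the web server is reachable at all. The sketch below uses only the Python standard library. It assumes the Airflow version in the image exposes a `/health` endpoint on the web server (many Airflow releases do, but this is not confirmed for this image) and that port 8080 is open in the VM’s network rules. The helper names `webserver_url` and `is_airflow_reachable` are hypothetical.

```python
import http.client
import urllib.request


def webserver_url(host, port=8080, path="/health"):
    """Build the URL to probe; the /health path is an assumption."""
    return f"http://{host}:{port}{path}"


def is_airflow_reachable(host, port=8080, path="/health", timeout=5):
    """Return True if an HTTP GET to the web server answers with status 200."""
    url = webserver_url(host, port, path)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts, DNS failures and HTTP errors
        # (urllib's URLError and HTTPError are subclasses of OSError).
        return False


if __name__ == "__main__":
    host = "20.204.19.223"  # example address from this guide; use your VM's IP
    print(webserver_url(host), "reachable:", is_airflow_reachable(host))
```

If your image does not serve `/health`, passing `path="/"` checks the login page instead.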